Controversial Uses Of AI For Legacy Characters

The use of artificial intelligence to recreate the voices of legacy characters has become a significant point of debate in the entertainment industry. While some fans appreciate seeing iconic figures return to the screen, others argue that these digital resurrections lack the soul of a human performance. Studios often cite franchise continuity as a primary reason for using synthetic audio technology. As the technology advances, the legal and ethical questions surrounding the rights of actors and their estates remain a central focus for Hollywood.

‘The Mandalorian’ (2019–)

Mark Hamill returned as a younger Luke Skywalker in the season two finale through visual effects and synthesized audio. The production team utilized Respeecher technology to create a voice that mimicked the actor from the original trilogy era. This decision sparked a debate among fans regarding the ethics of replacing live performances with digital recreations. The technology analyzed archival recordings to produce a performance without any new lines recorded by Hamill himself.

‘The Book of Boba Fett’ (2021–2022)

The return of Luke Skywalker in this series further refined the use of AI voice synthesis to portray the character in his prime. Developers used an algorithm to process old radio broadcasts and film takes to generate the dialogue. Critics pointed out that the synthetic voice lacked the emotional cadence and natural inflections of a human actor. The project highlighted the growing trend of studios relying on digital assets rather than casting new actors for legacy roles.

‘Obi-Wan Kenobi’ (2022)

James Earl Jones officially retired from voicing Darth Vader and authorized Lucasfilm to use AI to keep the character alive. The company used software to recreate the menacing tone of the Sith Lord from his appearances in the late seventies. While the actor's family supported the move, the transition raised questions about the future of voice acting in major franchises. This instance marked one of the first times a legendary performer explicitly signed over their vocal likeness for future digital use.

‘Top Gun: Maverick’ (2022)

Val Kilmer reprised his role as Tom "Iceman" Kazansky despite losing his natural speaking voice to throat cancer. The production collaborated with Sonantic to create an AI model that could speak in the actor's distinctive style. This technology drew on years of archival footage to synthesize a performance that gave the character a meaningful interaction with Maverick. Many saw the use of AI in this context as a compassionate application of the technology to honor a legacy.

‘Alien: Romulus’ (2024)

The film featured a synthetic character named Rook who shared the likeness and voice of the late actor Ian Holm. Filmmakers used generative AI and facial scanning to recreate the appearance of the science officer from the original 1979 movie. The actor's estate gave permission for the recreation, but the move still drew significant criticism from audiences. Many viewers expressed discomfort with the digital resurrection of a performer who had died years before the production.

‘Rogue One: A Star Wars Story’ (2016)

This prequel film used digital technology to bring Grand Moff Tarkin back to the screen decades after the death of Peter Cushing. Guy Henry provided the physical performance while a synthetic process helped match the vocal patterns of the original actor. The character was central to the plot, but his inclusion ignited a lasting controversy over the rights of deceased performers. The film served as a landmark case for the industry regarding the use of posthumous digital doubles in later projects.

‘Ghostbusters: Afterlife’ (2021)

The character of Egon Spengler appeared as a spectral figure in the climax of the film to assist the new generation of heroes. Since Harold Ramis had passed away, the production used a combination of body doubles and digital tools to recreate his presence. While the focus was on the visual likeness, the audio elements were carefully managed to evoke the original character. This creative choice aimed to provide closure for fans but also raised familiar ethical questions about digital necromancy in film.

‘The Flash’ (2023)

The superhero film featured several digital cameos of past actors, including Christopher Reeve and George Reeves as different versions of Superman. The production team used computer-generated imagery and synthetic audio to place these legacy stars within the multiversal sequence. While the sequence was intended to honor the history of the franchise, it received backlash over both the quality and the ethics of the digital recreations. It highlighted the ongoing debate about using the likenesses of actors who can no longer consent to new roles.

‘Road House’ (2024)

The remake of the classic action film faced a lawsuit from the original screenwriter, R. Lance Hill, regarding the use of AI during the production. The legal complaint alleged that the studio used generative technology to recreate the voices of the actors in order to finish the film during the SAG-AFTRA strike. While the studio claimed that the technology was used only for temp tracks and not the final product, the situation raised alarms across the industry. This case remains a significant example of the legal battles surrounding artificial intelligence and actor protections.

‘Indiana Jones and the Dial of Destiny’ (2023)

The opening sequence featured a de-aged Harrison Ford as a younger version of the famous archaeologist. To match the visual effects, the sound department used AI tools to make the actor's voice sound as it did in the early eighties. This process involved cleaning up old recordings and adjusting the pitch to remove the natural aging of the vocal cords. The result was a seamless transition that allowed the film to revisit a classic era of the franchise.

‘Coming 2 America’ (2021)

James Earl Jones returned to voice King Jaffe Joffer in this sequel decades after the original film was released. There were reports that digital assistance was used to maintain the strength and clarity of his iconic voice for the performance. This allowed the veteran actor to preserve the regal presence of the character despite his advanced age. The use of technology served as a bridge between the two films and ensured continuity for the audience.

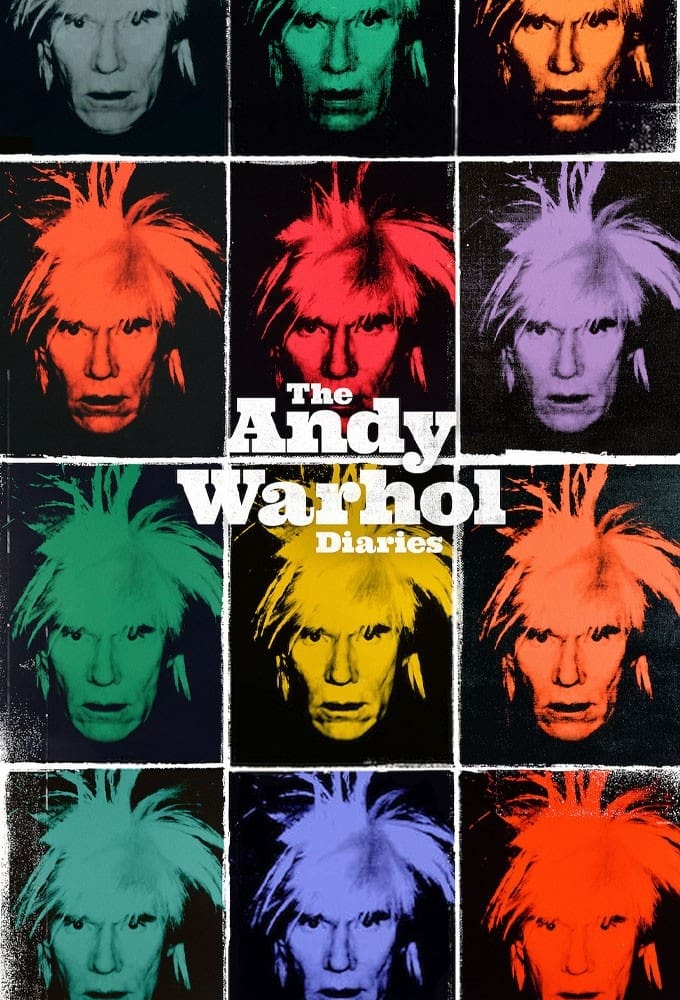

‘The Andy Warhol Diaries’ (2022)

This documentary series used artificial intelligence to recreate the voice of Andy Warhol reading his own private journals. The production team used software to analyze original recordings and generate a voice that captured the artist’s distinct flat delivery. While the creators argued that the technology allowed Warhol to tell his own story, many critics questioned the ethics of synthesizing a deceased person’s voice for a narrative. The series showcased the potential for AI to bring historical figures back to life in a way that feels personal yet remains highly controversial.

‘Here’ (2024)

This film used advanced AI technology to de-age Tom Hanks and Robin Wright and portray them at different stages of their lives. The software allowed the actors to perform the roles themselves while the digital layer adjusted their appearances and voices in real time. The ambitious project aimed to tell a story spanning generations without the need for multiple sets of actors. The reliance on AI for the entire core of the film represents a major shift in how legacy stars are used in modern cinema.

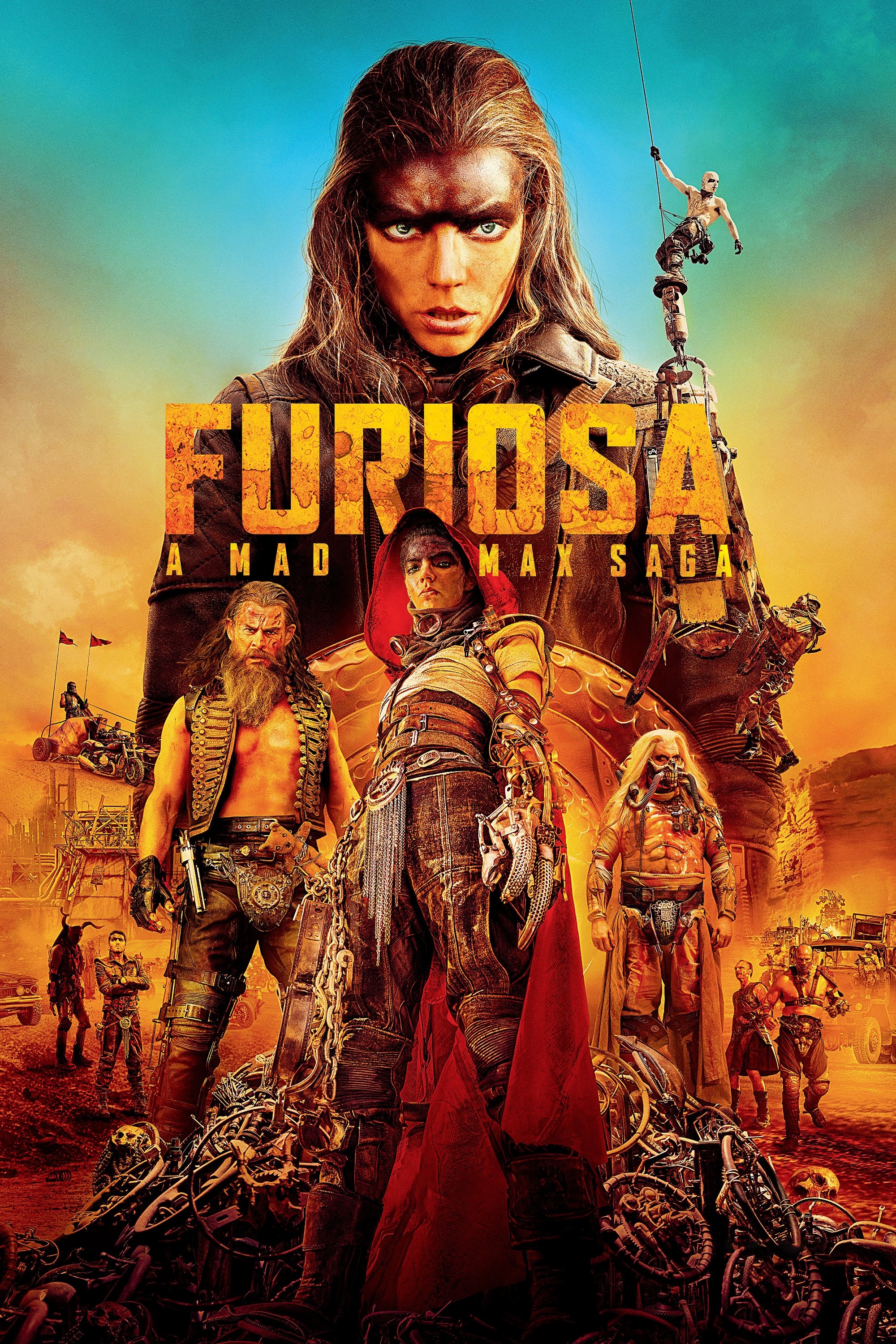

‘Furiosa: A Mad Max Saga’ (2024)

Director George Miller used AI voice-blending technology to create a seamless transition between the younger and older versions of the titular character. The voices of Alyla Browne and Anya Taylor-Joy were merged so that the character sounded consistent as she aged. This subtle use of the technology aimed to enhance the immersion of the story by providing a unified vocal identity. It demonstrated how AI can be used for artistic cohesion rather than just recreation.

‘Star Wars: The Rise of Skywalker’ (2019)

The production of this film faced the challenge of completing the story of General Leia Organa after the passing of Carrie Fisher. Filmmakers used unreleased footage from previous installments and digital tools to integrate the character into the new narrative. Voice artists and archival audio were blended to create the dialogue for her final appearance in the saga. This process was intended as a tribute to the actress but sparked debate about the boundaries of digital recreation.
